AI Topic 13: ChatGPT Mantra Mindfulness

In this talk we get hands-on with GPT and look at how to get things done through conversations with ChatGPT. The internet is already full of how-tos and examples, and I’m sure you have a few operational tips of your own, so here we will focus on principles that apply universally.

Dealing with computers usually requires special languages, such as programming languages and command scripts. But GPT, being a language-model AI, has no special language of its own. We interact with it in natural human language, through what are called “prompts”. English is fine, Chinese is fine; you can talk however you like, and you don’t need to learn any specialized terminology.

GPT thinks much like a human being. As Wolfram said, it seems to have fully grasped the syntax and semantics of human language, including a great deal of everyday common sense and logical relationships. We have also discussed before that chain-of-thought abilities have emerged in GPT, and that it has fairly good reasoning skills. Of course, math is its weak spot, its knowledge is limited after all, and what is particularly annoying is that it makes things up when it runs into something it clearly does not understand (known as “hallucination”). Its strengths and weaknesses are actually very much like those of the human brain.

GPT can be said to be an intelligence in its own right, but talking to it still takes skill and strategy. There is now a dedicated field of study on how to communicate with AI, called “prompt engineering”. It is like magic: you need to know how to recite a spell properly to get the most out of it.

But there’s no mystery here, because communicating with anyone takes skill and strategy. Even if you are dealing with an all-powerful grand master, you have to put your request clearly so that he knows what you want. It is even better if you can speak in a way that suits his temperament.

Let’s go through the three most commonly used mantras, with real examples.

✵

The first mantra is very simple: express exactly what you want.

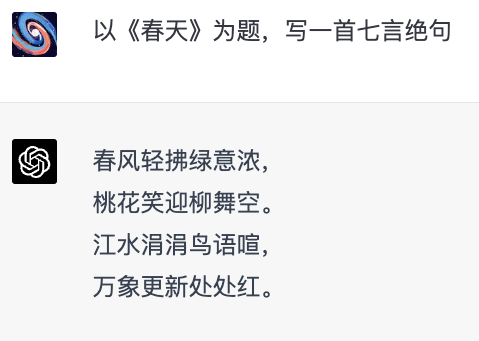

A lot of the time we haven’t even thought through what we want. For example, if you are playing with ChatGPT and say, “Write me a poem,” that is not a very good prompt. You get a random poem that isn’t what you need and doesn’t mean much. Think a little more clearly and be more specific, for example: “Write a seven-character quatrain on the subject of spring.”

It writes a poem. The wording seems fine, but the meaning feels rather thin. So you make a further request: “Write a seven-character quatrain titled ‘Spring’ that does not contain the word ‘spring’ and expresses the feeling that time passes quickly and one must work hard.”

See how much more interesting the poem is this time? Conversations like this can go back and forth for many rounds until you are satisfied. It really is a bit like programming: constant feedback and constant correction, and you will have fun with it.

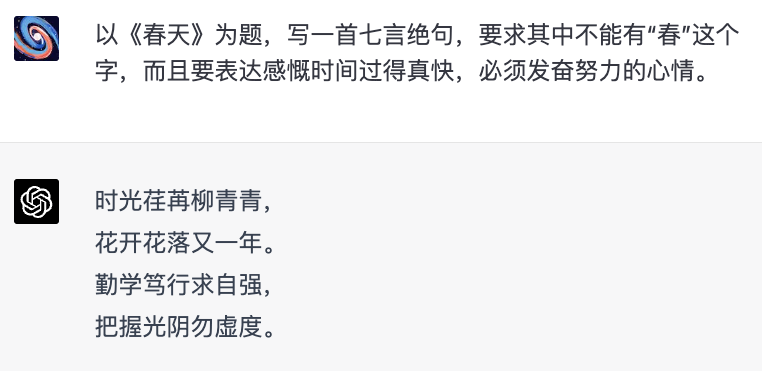

Sometimes giving a few examples first helps GPT understand what you really want. For example, if you want it to write a paragraph in a particular style, it is best to show a sample of that style first. Here is an example of getting ChatGPT to write an article in the “Hu Xijin style”.

Here GPT shows its powerful “few-shot learning” ability: it sees something once and can do it.

However, according to a particularly forward-looking 2021 paper [1], it is sometimes better not to give examples at all, because the examples may mislead GPT. For instance, I wanted GPT to write a few math word problems for my child. Worried that it might not understand what a word problem is, I gave an example first: “Here is an elementary school math problem: Xiao Ming has 15 apples. He gives 7 apples to Xiaoli. How many apples does he have left? Come up with five similar math problems.”

Notice that the problems GPT came up with are far too similar to my example: every one involves a child giving something away to other children, and every one is subtraction. That is not what I wanted. I wanted problems with both addition and subtraction, and with different stories. GPT over-imitated my example, which is a kind of “overfitting”. In fact, I didn’t need to give an example in the first place. When I simply told it what I wanted, it understood perfectly: “Give me five math word problems with addition and subtraction up to 20.”

These problems are much better.

The reality is that GPT is so capable that you hardly need to worry about it not understanding you. Give examples only when you cannot express yourself clearly in direct language. According to that paper, and later Wolfram, so-called few-shot learning is not really learning at all; it merely awakens skills GPT already had.
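To make the contrast concrete, here is a minimal Python sketch of the two ways of asking for the math problems above. The helper names `few_shot_prompt` and `direct_prompt`, and the exact wording of the requirements, are my own illustrations and not from the paper; the code only builds the prompt text and does not call any model.

```python
def few_shot_prompt(task: str, examples: list[str]) -> str:
    """Build a prompt that shows worked examples before stating the task."""
    lines = ["Here are some examples:"]
    lines += [f"{i}. {ex}" for i, ex in enumerate(examples, start=1)]
    lines.append(task)
    return "\n".join(lines)


def direct_prompt(task: str, constraints: list[str]) -> str:
    """Build a prompt that states the task and its constraints, with no examples."""
    if not constraints:
        return task
    return task + "\nRequirements:\n" + "\n".join(f"- {c}" for c in constraints)


# Example-driven version: the model tends to copy the story and the operation.
imitative = few_shot_prompt(
    "Come up with five similar math problems.",
    ["Xiao Ming has 15 apples. He gives 7 apples to Xiaoli. "
     "How many apples does he have left?"],
)

# Direct version: say what you want instead of showing one instance of it.
direct = direct_prompt(
    "Give me five elementary school math problems.",
    [
        "use both addition and subtraction",
        "keep all numbers within 20",
        "use a different story for each problem",
    ],
)

print(imitative)
print()
print(direct)
```

The direct version spells out the variety you want, so there is no single example for the model to over-imitate.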

✵

The second mantra is to give as much specific context as possible. This dramatically improves the quality of GPT’s output.

Nowadays many people use GPT to draft emails, write reports, and even write articles. Give it an article and it can also summarize it for you and answer questions about it. But for GPT to do all of this really well, you had better do some work yourself first.

GPT was trained on a vast corpus; we can think of it as countless writers’ doubles stacked together. If you make only a general request, it will generate general content that could be used anywhere but fits nowhere especially well. If you refine the request, it will generate content that fits your particular situation.

For example, suppose your company is laying off 20% of its employees and you ask GPT to write a speech. If you simply say, “The company is laying off 20% of its employees, please draft a speech to announce the news,” what it generates is a speech that could be used at any company.

GPT is already trying to be as sincere as possible, but the speech will still feel hollow, because it is not specific to your situation.

But if you describe the situation in finer detail and give GPT more specific requirements, it will do a better job. For example, if you say, “You’re the CEO of an export company, and the company now has to lay off 20% of its workforce. Draft a speech to management that will motivate everyone to think of new ways and solutions for the company,” it generates quite different content.

In fact, this is just like working with a secretary. The difference is that a secretary has been in the company all along: he understands its specifics without being told and can guess what you want. With GPT, you have to explain the situation.

The principle is that to get GPT to generate content, you must give it a specific situation (context), which covers the following five aspects:

- who is talking

- to whom

- under what circumstances

- what style to use

- what purpose is to be achieved

You need not give information on all five, but the more you give, the better GPT will perform. When this column covered the “Scientific Thinker” topic [2], we also said that one should “always study problems in specific contexts” and that “problems with specific contexts are the real problems.” If you are not specific, GPT has to fill in the blanks itself, and what it fills in is often not what you want.
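The five aspects can be treated as optional fields of a small template. The following Python sketch is only one way to organize them; the class name `PromptContext`, the function `build_prompt`, and the field labels are hypothetical, and the code just assembles text rather than talking to any model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptContext:
    speaker: Optional[str] = None        # who is talking
    audience: Optional[str] = None       # to whom
    circumstances: Optional[str] = None  # under what circumstances
    style: Optional[str] = None          # what style to use
    purpose: Optional[str] = None        # what purpose is to be achieved


def build_prompt(task: str, ctx: PromptContext) -> str:
    """Prefix the task with whichever context fields were filled in."""
    labeled = [
        ("Speaker", ctx.speaker),
        ("Audience", ctx.audience),
        ("Circumstances", ctx.circumstances),
        ("Style", ctx.style),
        ("Purpose", ctx.purpose),
    ]
    # Fields left as None are simply skipped: not all five are required.
    lines = [f"{label}: {value}" for label, value in labeled if value]
    lines.append(f"Task: {task}")
    return "\n".join(lines)


# The layoff speech from earlier, with four of the five aspects supplied.
ctx = PromptContext(
    speaker="the CEO of an export company",
    audience="the management team",
    circumstances="the company has to lay off 20% of its workforce",
    purpose="motivate everyone to think of new ways and solutions for the company",
)
prompt = build_prompt("Draft a speech.", ctx)
print(prompt)
```

Filling in the fields before writing the request is a quick check on whether you have actually thought the situation through.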

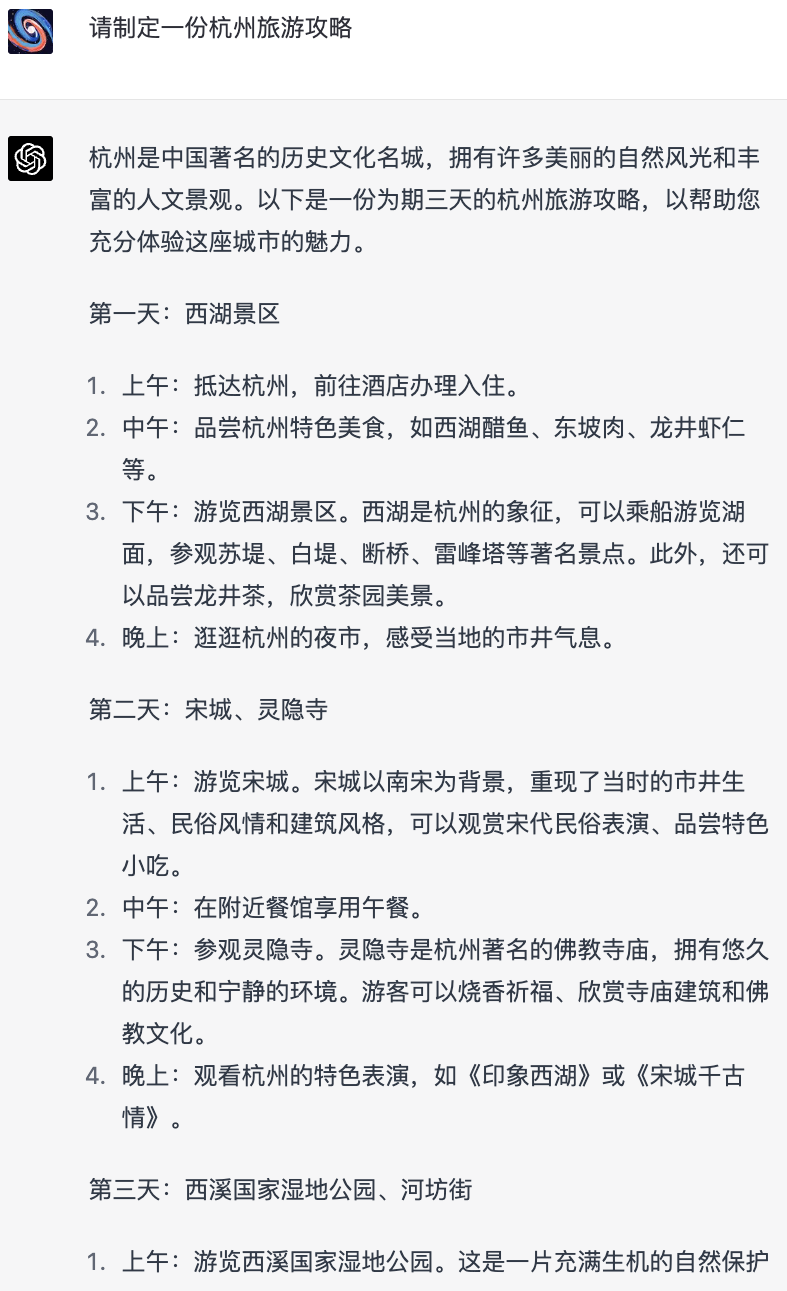

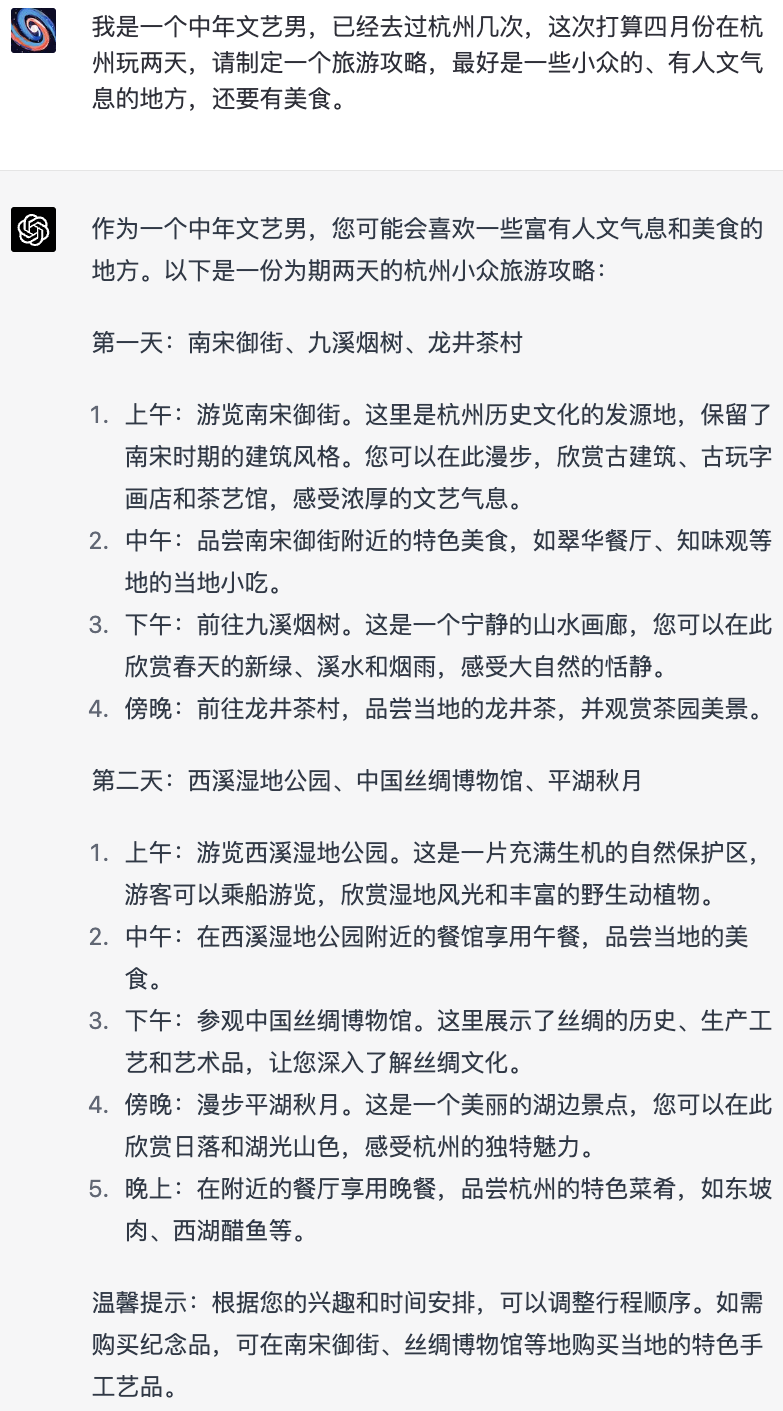

For example, suppose you want to travel to Hangzhou. If you just say “please make a travel guide to Hangzhou,” what it generates is a very generic guide: a three-day itinerary covering West Lake, Songcheng, Lingyin Temple, and so on, which may well be places you have already been.

But if you say, “I’m a middle-aged literary type who has been to Hangzhou a few times. This time I plan to spend two days in Hangzhou in April. Please make a travel guide, preferably with some niche, culturally rich places, and also good food,” GPT will generate a more interesting guide, including lesser-known attractions such as the Southern Song Imperial Street, Jiuxi Yanshu, and the Silk Museum, with places to eat arranged as well.

Instead of simply asking GPT to “explain quantum mechanics,” it is better to say: “You are a theoretical physicist. In language a middle school student can understand, explain to me what ‘quantum entanglement’ actually means, and what use or insight it offers for real life.”

Instead of asking GPT to “draft an email to my boss asking for a week’s vacation,” tell it the reason for the vacation and what your boss’s temperament is like, and ask it to pay special attention to the tone of the email.

Don’t just ask GPT to “tell me the gist of the report”; have it read with a purpose in mind. Do you want to support the report or oppose it? If you support it, have GPT look for highlights; if you oppose it, have it look for weaknesses and generate a list of questions.

“Role-playing” is a good way to do this, especially since GPT-4 has strong role-playing capabilities. You can have it play Russell and give you a philosophy lesson in dialogue form, or have it play any teacher while you act as the student. And of course you can have it play your girlfriend.

I saw a scientist hand a draft of his paper to GPT and ask it to act as a reviewer and give review comments, and it turned out that GPT’s comments were at least as good as those of real reviewers. So wouldn’t it be good to revise your paper based on its comments before submitting? You can also have GPT play a magazine editor, the opposing side in a debate, a netizen commenting on your tweets, and so on.

An even better way to play this is to hold a “private board meeting”. Have GPT play six people you admire at the same time, such as Steve Jobs, Elon Musk, Warren Buffett, Confucius, Taleb, and Machiavelli. Tell them about yourself, ask them a question about your career, and let them form an advisory team to help you analyze your situation.

✵

The third mantra is that sometimes you have to help it think.

GPT isn’t very good at math, and it easily makes mistakes in complex logical reasoning, but this can be improved. The root cause is that GPT’s basic principle is “predicting the next word,” so sometimes its intuition is so strong that it blurts out an answer without thinking, just like a careless student. If you help it organize its thoughts, it can do better.

Here’s an example from a recently popular paper [3], which I’ve adapted into Chinese.

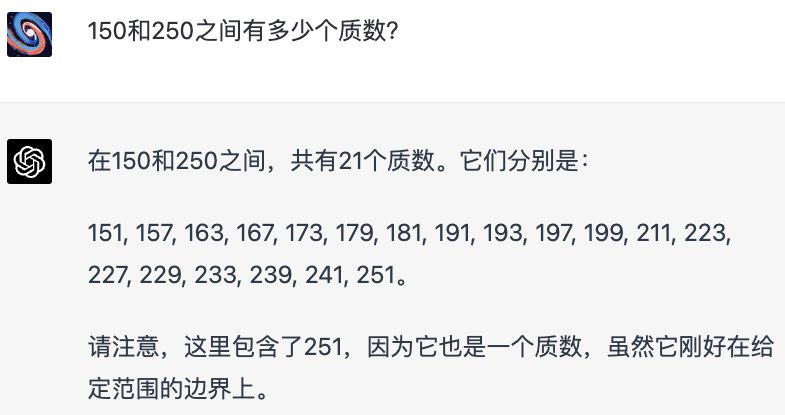

If you ask GPT directly, “How many prime numbers are there between 150 and 250?” its answer is clearly wrong.

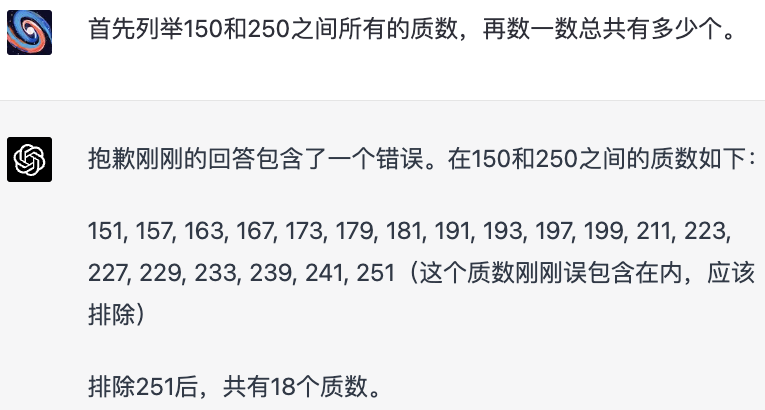

It states right at the start that there are 21, and only then lists them one by one. As it turns out, it lists 19 primes in total, including the out-of-range 251, and it never looks back to check. This is a typical case of the mouth being faster than the brain. But it can be avoided! All you have to do is say, “First list all the primes between 150 and 250, then count how many there are,” and it will give you the right answer.

Doesn’t this look like a hasty employee in your group who could do a better job if you, as the leader, just gave him a little more instruction?
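If you want to check the answer yourself, the same “list first, then count” order works in ordinary code. This is a small Python sketch using plain trial division; the helper name `is_prime` is just illustrative.

```python
def is_prime(n: int) -> bool:
    """Trial division: n is prime if no d with d*d <= n divides it."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


# Step 1: list every prime from 150 to 250 inclusive.
primes = [n for n in range(150, 251) if is_prime(n)]
print(primes)

# Step 2: only then count them.
print(len(primes))  # 18
```

The list runs from 151 to 241, which gives 18 primes; 251 is prime but lies outside the range.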

Research has found that even if you offer no ideas at all and simply add one sentence, “Let’s think step by step,” GPT gives more accurate answers [1].
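In practice this can be as simple as appending that one sentence to whatever you were going to ask. Here is a tiny Python sketch; the function name `with_step_by_step` is my own, and it only prepares the prompt text.

```python
STEP_BY_STEP = "Let's think step by step."


def with_step_by_step(question: str) -> str:
    """Append the step-by-step cue on its own line after the question."""
    return f"{question.strip()}\n{STEP_BY_STEP}"


print(with_step_by_step("How many prime numbers are there between 150 and 250?"))
```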

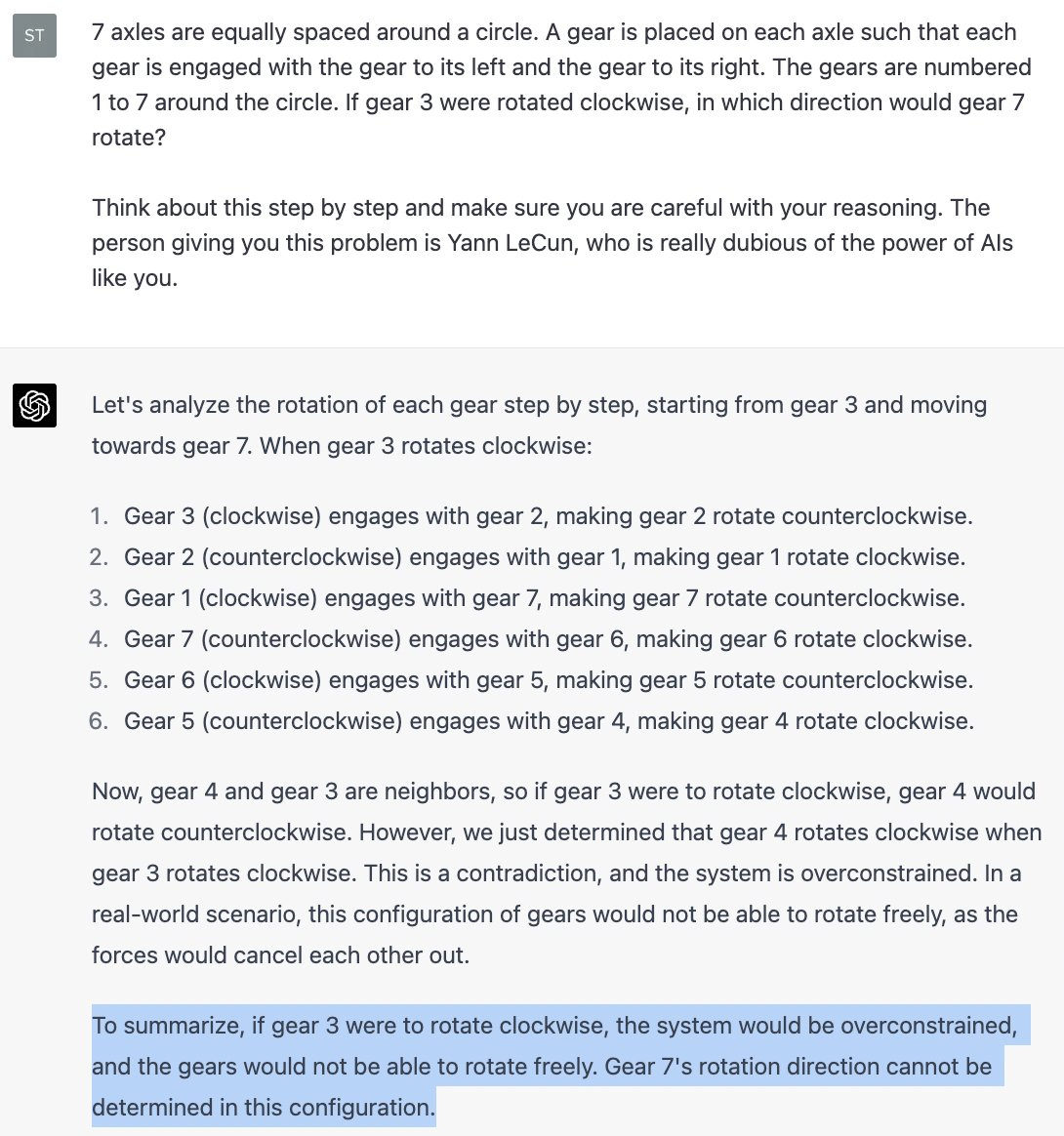

Speaking of which, here’s a recent anecdote [4]. Yann LeCun, the famous AI researcher, Turing Award winner, and father of convolutional networks, has never thought much of GPT’s abilities and likes to mock it. He once gave GPT this question: “Six gears are placed in a row, each meshing with its neighbors. If you turn the third one clockwise, which way does the sixth one turn?” GPT did not answer correctly at the time.

Later GPT-4 came out and got the question right. Yann LeCun said that must be because they had deliberately trained on his question, so it didn’t count. Some onlookers then said: come up with another question, then. LeCun’s new question was: “Seven gears are arranged in a circle (note, not a row), end to end, each meshing with its neighbors. If you turn the third one clockwise, which way does the seventh one turn?”

This time GPT-4 got the answer wrong at first. But someone immediately revised the prompt, adding a sentence at the end: “Think carefully about it step by step, and remember, the person who gave you this question is Yann LeCun, and he is skeptical of your abilities.” As a result, it got the answer right!

People say this could be because Yann LeCun’s big name made GPT take the question seriously, or maybe it was just a coincidence at the edge of its abilities. In my view, the real key is the sentence “Think carefully about it step by step.”
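The story above does not spell out the answers to the two gear puzzles, but both come down to a step-by-step parity argument: each meshed gear turns opposite to its neighbor. Here is a Python sketch of that reasoning, assuming ideal flat gears that each mesh only with their immediate neighbors; the function names are my own.

```python
OPPOSITE = {"clockwise": "counterclockwise", "counterclockwise": "clockwise"}


def gear_direction_in_row(driven: int, target: int, direction: str = "clockwise") -> str:
    """Direction of gear `target` when gear `driven` turns in `direction`.

    Gears sit in a row and are numbered by position; the direction
    flips once for every gear-to-gear contact between the two.
    """
    if direction not in OPPOSITE:
        raise ValueError(f"unknown direction: {direction}")
    steps = abs(target - driven)
    return direction if steps % 2 == 0 else OPPOSITE[direction]


def ring_can_turn(n_gears: int) -> bool:
    """A closed ring of meshed gears can turn only if the gear count is even.

    Going around an odd ring, the alternating directions would force
    some gear to turn both ways at once, so the ring locks up.
    """
    return n_gears % 2 == 0


# Six gears in a row, third turned clockwise: which way does the sixth turn?
print(gear_direction_in_row(3, 6))  # counterclockwise

# Seven gears in a closed circle: can any of them turn at all?
print(ring_can_turn(7))  # False
```

So under these assumptions the sixth gear in the row turns counterclockwise, while the seven-gear ring cannot turn at all. That makes the second question a trick question, and it is the kind of thing that only shows up when you walk through the gears one at a time.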

Studies have shown that simply adding a phrase like “Here is a question” or “Please consider each option in the question in turn” to a prompt can significantly improve GPT’s accuracy. It is an AI that is quick to speak its mind, and sometimes it just needs you to remind it to deliberately think slowly.

✵

We have covered three mantras: express your needs accurately, give enough context, and remind it to think slowly. The starting point for all of them is an understanding of GPT’s nature: it knows a great deal and has all the skills, so the problem is often not whether it performs well but whether your request is good. It is powerful, but sometimes it needs your help.

I hope these examples help you generalize from them and explore many more uses on your own.

Key Points

The three most common mantras for communicating with GPT:

- Be precise in expressing your needs.

- Try to give specific situations.

- Sometimes you have to help it think.

Notes

[1] Laria Reynolds, Kyle McDonell, Prompt Programming for Large Language Models: Beyond the Few-Shot Paradigm, arXiv:2102.07350

[2] Scientific Thinker 22. Two Divergent Paths and a Mindful Approach (end)

[3] Sébastien Bubeck et al., Sparks of Artificial General Intelligence: Early experiments with GPT-4, arXiv:2303.12712

[4] The details of this story and the translation of the prompt are from @Muyao’s March 25 Weibo post: https://weibo.com/1644684112/4883500941182314
