AI Topic 14: The GPT Worldview So Far

A few months have passed since the GPT wave took off, and some of my views are settling, so in this talk let’s take stock of where things stand.

When something new appears, you find it amazing and go around proclaiming how amazing it is. That is risky: the wave may pass quickly, the thing may turn out to be less impressive than you first imagined, and then you will look rather silly.

But I think that’s the right thing to do. Being excited and startled at the sight of something new proves that your heart still stirs and that your thinking hasn’t hardened. That is much better than explaining every new thing with your old worldview and saying, ah, I figured this out thirty years ago. To keep growing, you have to be willing to let people call you stupid.

GPT is by no means something a thirty-year-old idea can explain. Several key breakthroughs in the last few years have transformed neural network and language model research.

In my humble opinion, all books published before 2021 that talk about AI are now collectively obsolete.

✵

Is there still a fundamental difference between AI and the human brain? What the hell is a language model?

In February 2021, in Season 4 of our column, we showed you the following picture [1]:

Computer vision expert Andrej Karpathy used this photo in a blog post [2] in October 2012. His argument at the time was that it would be very, very difficult to get an AI to understand this photo.

The photo shows Obama and a few of his colleagues in a hallway. A man is standing on a scale weighing himself; what he doesn’t know is that Obama is behind him, pressing a foot on the scale to add a little weight. Everyone around them is laughing.

For an AI to understand why this scene is funny, it would first need some ‘common sense’: what a scale is for; that an extra foot on the scale increases the reading; that Obama’s prank works because people today want to lose weight and are afraid of gaining it; and that it is funny for someone with the stature of the President to make such a joke. This kind of common sense is not systematically listed in any book. It is all stuff we humans use daily without knowing it: ‘tacit knowledge’.

How do you teach an AI this tacit common sense?

There’s a computer scientist named Melanie Mitchell, a student of Douglas Hofstadter; I first learned about evolutionary algorithms from her book. Mitchell’s 2019 book, Artificial Intelligence: A Guide for Thinking Humans (published in Chinese as AI 3.0), also discusses this problem. In it she used many examples to show how difficult it is to teach AI common sense.

Difficult indeed. You can’t just write down all of human common sense line by line and feed it to the AI as a program; there is simply too much to write. Right?

So we all lament the difficulty of this task.

…… Unbeknownst to us, all that lamentation is now obsolete.

✵

GPT-4 can read that picture.

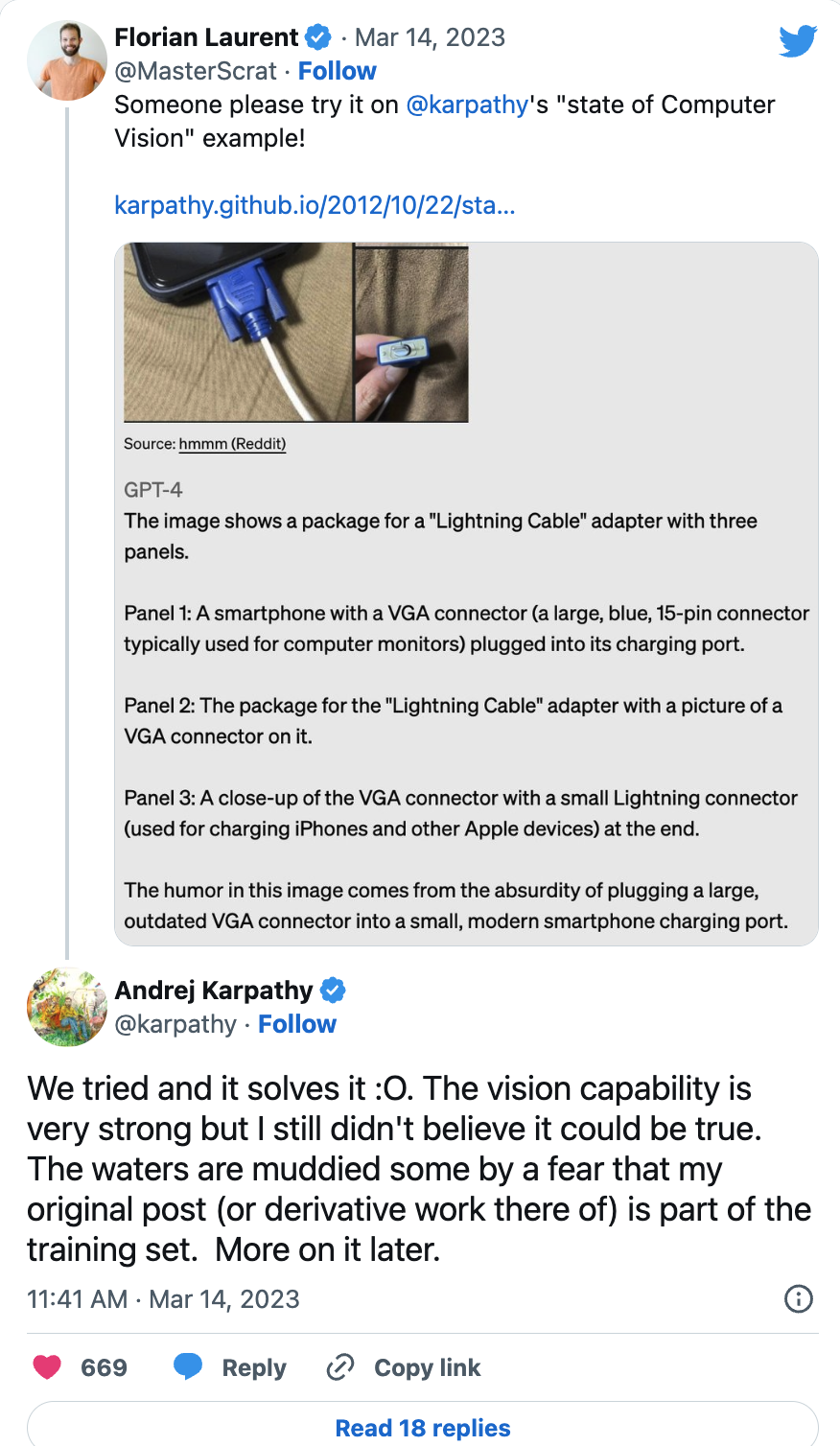

The day GPT-4 came out, OpenAI’s documentation included an example. In one picture, a VGA plug, the kind once used to connect old monitors, is plugged into a cell phone: a joke built on the mismatch.

And GPT-4 understood it: it clearly stated where the punchline of the picture is.

Someone immediately thought of Karpathy’s picture of Obama and the scale and asked him on Twitter whether GPT-4 could be tried on it. Karpathy promptly replied that they had already tested it, and GPT-4 read it and solved it.

Karpathy’s only concern was that OpenAI might have trained GPT on that picture. But I think that concern is unnecessary; by all accounts, GPT-4 is fully capable of reading such a picture.

Now you shouldn’t be surprised by this at all. As we mentioned earlier, I once asked ChatGPT whether a baseball bat could fit in a person’s ear, and why the Monkey King’s golden staff can. It answered well; it has common sense.

But how did it read it? How did this AI learn human common sense?

✵

In March 2023, NVIDIA CEO Jen-Hsun Huang and OpenAI Chief Scientist Ilya Sutskever had a conversation [3]. In this conversation, Sutskever further explains what GPT is all about.

It’s true that GPT is just a neural network language model trained only to predict the next word. But if you train it well enough, Sutskever says, it gets a very good grasp of all sorts of statistical correlations between things. And that means what the neural network really learns is “a projection of the world”:

Neural networks learn more and more about the world, people, aspects of the human condition, including their hopes, dreams, motivations, and their interactions with each other and the situations we find ourselves in. Neural networks learn to compress, abstract, and represent this information in a practical way. This is what is learned by accurately predicting the next word. And, the more accurate the prediction of the next word, the higher the fidelity and resolution of this process.

In other words, what GPT learns is not really the language; it is the real world behind the language!
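To make “trained just to predict the next word” concrete, here is a minimal sketch. It is a bigram counter, not a neural network, and certainly not how GPT is implemented; the corpus and the function names `train_bigram` and `predict_next` are invented for illustration. It only shows the shape of the objective: given what came before, guess what comes next.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration; real models train on vastly more text.
CORPUS = (
    "a foot on the scale makes the reading go up . "
    "a hand on the scale makes the reading go up . "
    "a foot off the scale makes the reading go down ."
)

def train_bigram(text):
    """Count, for each word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigram(CORPUS)
print(predict_next(model, "scale"))  # the word seen most often after "scale"
print(predict_next(model, "go"))     # the word seen most often after "go"
```

Even this toy picks up a sliver of the regularity in its corpus: after “go” it prefers “up”, because that is what the text about scales mostly says. The claim in the conversation is that a large neural network doing the same job far more accurately has to capture far more of the world behind the text.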

Let me give you an analogy. There is a Zen book called “Record of Pointing at the Moon”, which opens with an allusion to the Sixth Patriarch Hui Neng. Hui Neng said that truth is like the moon, and the texts of the sutras are like a finger pointing to the moon: you can follow the finger to the moon, but what you want is the moon, not the finger. The corpus used to train GPT is the finger, and what GPT captures is the moon.

That’s why GPT has common sense: it worked it out on its own from a vast corpus.

So, you ask, can it really catch the moon by reading text alone? Maybe yes, or at least to a significant degree. How else? Isn’t that what we humans do when we read?

✵

Maybe you need to be more enlightened, maybe you just need to read more.

More makes a difference. More leads to ‘emergence’.

One of the earliest key breakthroughs happened in 2017, according to OpenAI president Greg Brockman in an April TED talk [4]. At the time, one of OpenAI’s engineers trained a very simple language model on Amazon product reviews. The model was ostensibly only analyzing syntax, yet it automatically gained a sense of semantics and could tell whether the sentiment in a review was positive or negative.

Then GPT used the Transformer architecture.

Then around 2021, GPT showed the ‘enlightenment’ and ‘emergence’ we talked about earlier: it automatically gained the ability to learn from a few examples (few-shot learning), to reason, and to follow a chain of thought.

There is another interesting feature. When you talk to ChatGPT, whether you ask a question in English or Chinese, as long as the question is not directly related to Chinese culture and history, the quality of its answer is almost the same. Of course, if you ask about China, it’s better to ask the question in Chinese, probably because only Chinese can express it accurately; but for general questions, such as scientific questions, it doesn’t make any difference to GPT which language you use. Why is that?

At first I thought that GPT translated Chinese into English, thought about the question in English, and then translated the answer back into Chinese. The more I used it, the more I realized that this was not the case. It has no dedicated translation step. It doesn’t care which language the prompt is in; the answer comes from the same place in the same neural network, and the language is just an interface for expression.

It’s the same as if most of my physics expertise is in English, but if you ask me a physics question, it doesn’t make any difference to me whether you use Chinese or English. I can’t say whether I think about physics in English or Chinese, I don’t recite any papers, I think directly.

That is, GPT, the language model, captures the thing behind the language.

In a March podcast interview [5], Sutskever also gave an example. He said that even without multimodal features, GPT already has a good understanding of colors just from learning text. It knows that purple is closer to blue than to red, and that orange is closer to red than to purple.

It has read those colors in different corpora, but it remembers not the specific corpora, but the world the corpora represent. It tacitly grasps the relationships between those colors.

✵

GPT training is not simply memorizing text; it is sensing the moon through the finger.

It’s something we didn’t expect at all a few months ago, and it’s a worldview level change.

The AI is grasping the common sense of the world based on text alone. Yes, language is just an incomplete representation of the real world, and a lot of things are left unsaid between the lines; but through language alone, the neural network is also able to grab a little bit of what’s behind it.

It can explain, it can reason, it can understand jokes in pictures, it can write and program. If that’s not understanding, what is?

Things are evolving in the direction of ‘AI is not fundamentally different from the human brain, the language model is a projection of the real world itself’.

GPT’s automatic emergence of semantics from syntactic analysis is the most critical step towards AGI. This is probably humanity’s most important discovery of the 21st century. I don’t think a Turing Award is the right frame; if you agree that AGI is alive, maybe we should give a ‘Nobel Prize in Physiology or Medicine’.

✵

Brute force has made the language model this powerful, so what’s the next step? Making it structurally smarter and, more importantly, domesticating AI.

In the conversation with Jen-Hsun Huang, Sutskever talks about what OpenAI is doing right now. GPT already knows a great deal about the world, but it needs to learn to express itself strategically.

A wise person is not more learned than someone who answers every question and says everything he knows; the difference is in what he chooses to say. OpenAI doesn’t want GPT to say everything. They have to teach it to say things people will readily accept, which means being politically correct, easy to understand, more helpful, and so on. GPT needs to become a little more subjective, preferably describing the moon in a way that is consistent with mainstream values.

And that’s not something that can be solved by feeding more corpus into pre-training; it requires ‘fine-tuning’ and ‘reinforcement learning’. Sutskever says OpenAI uses two methods: one lets people give the model feedback, and the other lets another AI train it.

What he’s talking about is the so-called “AI alignment problem,” or aligning the goals and values of AI with those of mainstream society, which is a hotly debated issue right now. As we mentioned earlier, companies are now talking about values, and ‘alignment’ has become a buzzword.

The winds of AI R&D have changed.

In early April, OpenAI CEO Sam Altman gave a presentation at MIT [6] saying that OpenAI is no longer pursuing ever more parameters for GPT, because he estimates that the returns from scaling up models are diminishing.

To put it bluntly: ‘diminishing marginal returns’. At GPT-4 scale, if you increase the number of model parameters by a factor of ten, your training costs and other expenditures may have to increase by more than a factor of ten, but the model won’t perform ten times better. You also run into physical limits: how many GPUs you have, how big a data center is, how much power you consume. All of these are capped.
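A little arithmetic shows what diminishing returns look like. This is only a sketch under a stated assumption: that the model’s loss falls as a power of the parameter count, with an exponent `alpha = 0.1` that I picked for illustration. Neither the curve nor the numbers come from Altman’s talk, and `loss` and `improvement_from_scaling` are hypothetical names.

```python
def loss(params, alpha=0.1):
    """Hypothetical power-law curve: loss falls as params ** -alpha."""
    return params ** (-alpha)

def improvement_from_scaling(factor, base_params=1e11, alpha=0.1):
    """Fractional loss reduction from multiplying the parameter count by `factor`."""
    before = loss(base_params, alpha)
    after = loss(base_params * factor, alpha)
    return 1.0 - after / before

# Each row pays for ten times more parameters than the previous one.
for factor in (10, 100, 1000):
    gain = improvement_from_scaling(factor)
    print(f"{factor:>5}x parameters -> loss falls by {gain:.1%}")
```

Under this assumed curve, ten times the parameters cuts the loss by only about a fifth, and each further tenfold increase buys less than the one before, while the bill keeps multiplying by at least ten.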

That’s why Altman says that the direction of research now is to improve the architecture of the model, and Transformer, for example, has a lot of room for improvement.

Altman also said OpenAI is not training GPT-5; the main work now is to make GPT-4 more useful. This is in line with OpenAI’s earlier hints: let AI evolve a little more slowly, so that humans can adapt.

So what we can expect is that the brute-force phase of big models has come to an end for now. Now that AI has a significant degree of intelligence, the primary issue for the near future is not to make it smarter, but to make it more accurate, more useful, and more socially acceptable.

This all sounds great. But as far as I know, for mathematical reasons, it is not possible for OpenAI to completely “control” GPT. We’ll talk about that next.

Notes

[1] Elite Day Lessons, Season 4, The Difficulty of Daily Use and Not Knowing It

[2] Andrej Karpathy, The state of Computer Vision and AI: we are really, really far away. Oct 22, 2012, http://karpathy.github.io/2012/10/22/state-of-computer-vision/

[3] https://www.youtube.com/watch?v=ZZ0atq2yYJw The part we are interested in starts at minute 21.

[4] https://youtu.be/C_78DM8fG6E

[5] https://www.eye-on.ai/podcast-archive, March 15

[6] https://www.wired.com/story/openai-ceo-sam-altman-the-age-of-giant-ai-models-is-already-over/

Getting to the point

- What GPT learns is not really the language, but the real world behind the language. GPT, the language model, captures the thing behind the language.

- What we can expect is that the brute-force phase of big models has come to an end for the time being. Now that AI has a considerable degree of intelligence, the primary problem in the near future is not to make it smarter, but to make it more accurate, more useful, and more socially acceptable.