AI Topic 7: A Smarter Society

The book Power and Prediction was published less than six months ago, yet it says nothing about ‘generative’ AI like ChatGPT, so perhaps the authors didn’t expect generative AI to become so hot. The book expects one thing from AI: predictions. It is easy to see why three economists would approach it from this angle, because the gain in economic efficiency from prediction is macro-level and decisive: prediction promotes a more efficient allocation of resources.

Simply put, AI’s predictions make society smarter.

As we said earlier, there are three levels of AI adoption: ‘point solutions’, ‘application solutions’ and ‘system solutions’. This talk briefly covers how to implement a system solution in an organization or a city.

The book Power and Prediction gives two examples, insurance and healthcare.

✵

I’ve heard that the most recent layoffs attributed to AI replacement have been at insurance companies. That makes some sense: the most important thing in the insurance business is making predictions, and AI is very good at prediction in such data-intensive areas.

Let’s take homeowner’s insurance as an example, where the whole business can be broken down into three main decisions:

marketing: analyzing the population of potential customers to see how valuable they are, what is the probability that they can be converted into customers, and deciding how much effort to put into getting them;

underwriting: calculating a reasonable premium based on the value of the home and the risk of an accident, which should be both profitable for the company and price competitive in the market.

claims: once something happens to the house and the customer claims, you need to assess whether that claim is reasonable and legitimate and expedite the claim.

The predictive part of all these decisions can be handed over to AI. For example, some insurance companies have now automated the claims process. If your house is hit by a hailstorm and the roof is damaged, the insurance company doesn’t need to send someone to the scene to check it out. You take a picture and send it to them, and the AI looks at it and estimates how much the claim should pay out, which saves a great deal of time and effort.

But this is only a point solution. In a system solution, the insurance company would not only settle money with you but also get involved in how you maintain your house.

Today’s insurance companies raise your premiums when they see that your home is more at risk; an AI-enabled insurance company will use the premium as leverage to require you to take action to reduce the risk.

For example, 49% of house fires in the U.S. result from cooking at home, and not from cooking in general but mainly from frying. Some families never fry food and some do a lot of deep-frying, so it doesn’t make sense for those two types of families to pay the same amount for fire insurance. In the past, insurance companies had to charge them the same premium because they didn’t know which households loved deep-frying.

Now insurance companies can find out. They can ask whether they may install a device in your kitchen that automatically records every time you deep-fry. The customer’s first reaction is surely to refuse on privacy grounds. But if the AI prediction is accurate enough, the insurance company can offer a deal: allow the device to be installed, and your premium drops by 25%. Do you think the customer will accept?

As another example, suppose the insurance company concludes from an AI prediction that the plumbing in your house is getting old and prone to problems. It can come to you proactively and offer a small subsidy to fix the pipes.

At the moment, insurers are reluctant to do this because it means collecting less premium up front, and insurers prefer markups to markdowns. But if the AI is accurate enough for the insurer to see a tangible reduction in disasters, and claims costs fall by more than the premium it gave up, then its profits increase. The benefit is then double: the company not only insures against disasters but also reduces them.

And all of this is only possible if you can do the math very clearly.
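To make "doing the math" concrete, here is a minimal sketch of the insurer's calculation. Every figure except the 25% discount is a hypothetical assumption chosen for illustration, and the function names are invented for this example; none of it comes from the book.

```python
def expected_profit(premium, claim_probability, average_claim):
    """Expected annual profit on one policy: premium minus expected claim cost."""
    return premium - claim_probability * average_claim


def break_even_risk_drop(premium, discount, average_claim):
    """Smallest drop in claim probability that pays for the discount."""
    return premium * discount / average_claim


# All figures below are hypothetical, chosen only to illustrate the trade-off.
premium = 1200.0         # annual premium before any discount
average_claim = 40000.0  # average payout when a claim happens
risk_before = 0.020      # predicted yearly claim probability, no device
risk_after = 0.010       # predicted yearly claim probability, with device
discount = 0.25          # the 25% discount from the example above

profit_before = expected_profit(premium, risk_before, average_claim)
profit_after = expected_profit(premium * (1 - discount), risk_after, average_claim)
needed_drop = break_even_risk_drop(premium, discount, average_claim)

print(f"profit without device: {profit_before:.2f}")
print(f"profit with device and discount: {profit_after:.2f}")
print(f"risk drop needed to break even: {needed_drop:.4f}")
```

With these made-up figures the discount pays off only because the predicted risk falls by more than the break-even amount. If the prediction were too noisy to tell the two risk levels apart, the insurer could not know whether the discount was safe to offer.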

✵

A particularly common condition in hospital emergency rooms is a patient with chest pain. For this condition the doctor must determine if it is a heart attack, and if it is a heart attack it must be dealt with urgently. But the problem is that emergency physicians don’t have a good way to diagnose it.

The usual approach is to run a formal test, but heart tests can harm the patient. Accurate tests require cardiac catheterization or the like, which directly injures the body; even the simplest X-ray or CT exposes the patient to radiation.

So two economists built an AI diagnostic system that predicts, from a few externally observable symptoms, whether a patient is having a heart attack and whether further formal testing is needed. Studies have shown that this AI system is more accurate than the diagnoses of emergency doctors.

Comparisons with the AI revealed that many patients who should not have had invasive tests were asked by emergency physicians to have them. This seems understandable; after all, hospitals can charge more when patients undergo tests. But the system also found many patients who should have been tested whom the ER doctors sent home instead, and some of them missed treatment as a result, in some cases dying.

Put this way, switching to AI diagnosis is not only great for the patient but also good for the hospital. The workflow doesn’t need to change, diagnostic time goes down, and the hospital charges no less, right?

But it turns out that hospitals are mostly reluctant to adopt AI.

Hospitals are a very conservative type of organization. Probably because there are so many new technologies waiting to be adopted, hospitals are very reluctant to adopt any of them. Every time a new technology is adopted, doctors have to be retrained and processes reconsidered. There are also risks associated with new technologies, which sometimes work well in testing and then don’t work so well once they’re in use. New technology also affects the distribution of power across departments, with all the attendant problems.

That’s why hospital reform is the most difficult. But what would emergency rooms look like if hospitals could systematically adopt AI diagnostics?

A person feels chest pain and calls the hospital. The hospital AI directly predicts whether it’s a heart attack from the description of the symptoms, perhaps combined with readings from the smartwatch the person is wearing. Based on the AI’s report, the doctor can tell the person not to come to the hospital at all if everything looks fine. If the person is indeed having a serious heart attack, the hospital sends an ambulance directly, and the doctor in the ambulance brings instruments that can relieve the heart attack and takes first measures at the patient’s home to buy time for treatment.

This is a change in the entire emergency medical process.

✵

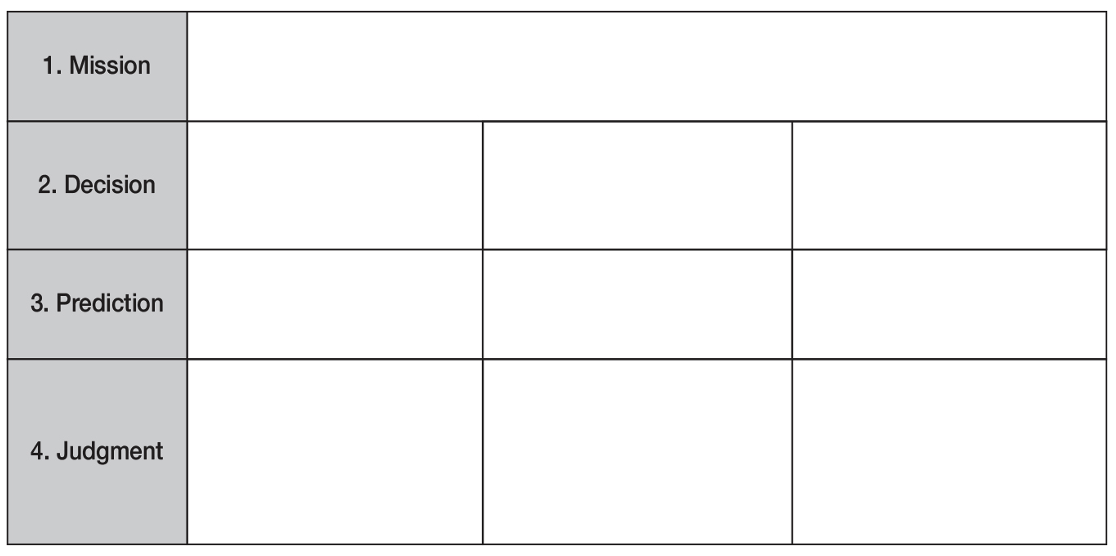

In the book Power and Prediction, any organization can be seen as a decision-making organization. The book lays out a strategic program for applying AI, which starts with filling out a form:

The first item on the form is the core mission of the organization, which remains the same, with or without AI.

Then several decisions involved in the core mission are listed, marking which departments make these decisions.

Then divide each decision into two parts, ‘prediction’ and ‘judgment’, and list which departments are currently responsible for these tasks.

Then consider how each department involved will be affected if prediction is handed over to AI, and consider organizational changes along these lines. We won’t go into the operational details here; let’s focus on trends.
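The form described above can be sketched as a small data structure. This is only an illustration that reuses the homeowner’s insurance example: the class names, field names, mission statement and department names are all hypothetical assumptions, not the book’s actual template.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    name: str
    prediction: str        # the part AI could take over
    prediction_owner: str  # department doing the prediction today (hypothetical)
    judgment: str          # the part that stays with people
    judgment_owner: str    # department exercising the judgment (hypothetical)


@dataclass
class StrategyForm:
    mission: str  # core mission, the same with or without AI
    decisions: list = field(default_factory=list)

    def departments_affected(self):
        """Departments whose prediction work would move to AI."""
        return sorted({d.prediction_owner for d in self.decisions})


form = StrategyForm(
    mission="Protect homeowners against loss",  # hypothetical wording
    decisions=[
        Decision("marketing", "probability a prospect converts", "Sales",
                 "how much effort a prospect is worth", "Sales"),
        Decision("underwriting", "risk of an accident", "Actuarial",
                 "what premium is profitable and competitive", "Pricing"),
        Decision("claims", "whether a claim is legitimate", "Claims",
                 "how fast to pay and when to dispute", "Claims"),
    ],
)

print(form.departments_affected())
```

Listing the prediction owner and the judgment owner separately makes it visible where one department would lose part of its current work while another keeps its role.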

✵

The transition from point solutions to system solutions for AI won’t take long, because point solutions tend to drive system solutions.

One particularly good example in the book is opening a restaurant. For restaurants, preparing ingredients is a game of uncertainty. Guests can only order what’s on the menu, so you only need to stock a specific set of ingredients. But you’ll find that guest ordering is volatile, with a burst of this and a burst of that, and ingredient consumption is uncertain. Let’s say you order 100 pounds of avocados every week. Sometimes 100 pounds is too much and you have to throw some away, and sometimes it’s too little and you can’t make the dish your guests ordered.

Now let’s say you use AI, and the AI can predict exactly how many avocados you’ll need to order next week. So you sometimes order 30 pounds a week, sometimes 300 pounds. You’ve reduced waste and you’ve secured supply, and your bottom line has improved.

But notice that because your uncertainty is reduced, your upstream supplier’s shipping uncertainty is increased. He loved it when you ordered 100 pounds every week, and now that you’re changing it up, his sales are fluctuating. What does he do? He has to use AI as well.

Where he used to regularly purchase 25,000 pounds of avocados every week, now he sometimes orders 5,000 pounds and sometimes 50,000 pounds. So what do you think happens to his upstream? That supplier has to use AI as well, and so on all the way up to the farmer who grows the avocados, who also has to use AI to predict market fluctuations.

In supply chain management, the volatility of one company can cause significant fluctuations in the entire supply chain, which is known as the “Bullwhip Effect”. The Bullwhip Effect leads to increased inventory, lower service levels and higher costs, among other things. This thought experiment tells us two things.

- *One is that to use AI, it’s best if society as a whole coordinates and everyone uses AI.*

- *The other is that applying AI may amplify social volatility at a moment’s notice, and we’d better be careful.*
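The restaurant thought experiment can be sketched in a few lines. This is a toy model under stated assumptions: the weekly demand values are invented, the AI forecast is assumed to be perfect, and unused avocados are assumed to spoil at the end of each week. It is not a full model of the Bullwhip Effect, only of how volatility moves upstream.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

# Hypothetical weekly avocado demand in pounds over one year.
weekly_demand = [random.choice([30, 60, 100, 150, 300]) for _ in range(52)]

fixed_orders = [100] * 52                # old habit: 100 pounds every week
predicted_orders = list(weekly_demand)   # assumes a perfect AI forecast


def waste_and_shortfall(orders, demand):
    """Total pounds thrown away and total pounds missing over the year."""
    waste = sum(max(o - d, 0) for o, d in zip(orders, demand))
    shortfall = sum(max(d - o, 0) for o, d in zip(orders, demand))
    return waste, shortfall


fixed_result = waste_and_shortfall(fixed_orders, weekly_demand)
predicted_result = waste_and_shortfall(predicted_orders, weekly_demand)

# Volatility the supplier sees in the restaurant's orders.
supplier_volatility_fixed = statistics.pstdev(fixed_orders)
supplier_volatility_predicted = statistics.pstdev(predicted_orders)

print("fixed orders (waste, shortfall):", fixed_result)
print("predicted orders (waste, shortfall):", predicted_result)
print("order volatility seen by supplier:",
      supplier_volatility_fixed, "->", supplier_volatility_predicted)
```

The restaurant’s waste and shortfall drop to zero, while the standard deviation of the orders reaching the supplier goes from zero to the full volatility of guest demand. The uncertainty has not disappeared; it has moved one step upstream.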

✵

How can we reduce the shocks AI sends through society? *A good way is to simulate it first.*

Here is an interesting story. The America’s Cup is a historic event, yet the teams are very tech-savvy. People are always trying to figure out how to improve the design of the sailboats. There’s an interesting dynamic here: when the design of the sailboat changes, the way a person handles the boat has to change with it. You have to find the best way to handle a new design before you know whether the design is any good.

Traditionally, when you design a new type of sailboat, you first have to get athletes to try sailing it in a variety of different ways. It takes time for an athlete to become proficient in a new method, and even then they don’t know if it’s the best one. Maybe a different design with a different way of sailing would work better. But with so many pairings, how would you find time to train new methods over and over again? The bottleneck of innovation here is the athlete.

In 2017, Team New Zealand, together with the consulting firm McKinsey & Company, invented a new way to test-sail new boats with AI instead of athletes.

When a new sailboat is designed, an AI first simulates the sailor’s operation. The AI is trained with reinforcement learning and left to find the best way to sail this type of boat. This can be much faster than working with human athletes.

Of course you can’t put the AI on the course in a real race. But after finding the best combination of boat type and handling method, you can let the AI teach the human athletes how to sail the boat. That’s how the athletes learn the best way to handle it without having to take part in repeated trials.
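The idea of pairing each design with its best handling method in simulation can be sketched as follows. This is a deliberately simplified stand-in: a plain hill-climbing search replaces the reinforcement learning described above, and the simulator, the design names and all numbers are hypothetical.

```python
# Hypothetical designs: (top speed in knots, ideal sail trim angle in degrees).
designs = {"hull_a": (40.0, 20), "hull_b": (42.0, 35)}


def simulated_speed(design, trim_angle):
    """Toy simulator: speed peaks when the trim matches the design's ideal angle."""
    top_speed, ideal_angle = design
    return top_speed - 0.05 * (trim_angle - ideal_angle) ** 2


def best_trim(design, start=0, step=1, max_steps=200):
    """Hill climbing: keep nudging the trim while the simulated speed improves."""
    angle = start
    for _ in range(max_steps):
        candidates = [angle - step, angle, angle + step]
        best = max(candidates, key=lambda a: simulated_speed(design, a))
        if best == angle:  # no neighbor is faster, so stop
            break
        angle = best
    return angle


# Evaluate every design with its own best handling, all in simulation.
results = {}
for name, design in designs.items():
    trim = best_trim(design)
    results[name] = (trim, simulated_speed(design, trim))

best_design = max(results, key=lambda name: results[name][1])
print(results)
print("best pairing:", best_design, results[best_design])
```

The point of the sketch is the loop structure: each design is judged only after the search has found how to handle it well, and no athlete’s training time is spent along the way.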

The spirit of this story is that any new method can be practiced in a simulated environment first. This is what Tim Palmer’s book “The Primacy of Doubt” describes as the value of a ‘virtual earth’: test any new policy in a virtual earth first and see what comes out of it.

In fact, by 2022 Singapore had already created a ‘Digital Twin’, a one-to-one virtual replica of Singapore. The system cost tens of millions of dollars to develop. Now, when the Singaporean government does urban planning, any measure can be tested in the virtual Singapore first.

For example, if Singapore wants to use AI to manage traffic, and is worried about whether it will cause large fluctuations in the entire system, it can first test this practice in the virtual Singapore.

✵

The trend now is to move from individual companies using AI for individual tasks, to systematic use of AI, to an entire society revolving around AI. AI will make our society smarter. There is a huge amount of work that both companies and government departments can do in this process.

And your life will change as a result. Would you be okay with the idea that frying croquettes at home could affect your homeowner’s insurance? Would you feel that AI is interfering too much in people’s lives?

If AI’s intervention means your insurance premiums go down, your life is easier, and you have more wealth, I think you’d be okay with that.

To return to the electricity analogy: if AI is everywhere in the future, we can forget about AI. We just need a vague idea of which behaviors are good and which are bad, without having to work out the numbers behind them. The AI will automatically lead us toward more good behaviors, and maybe society as a whole will be better off for it.

That’s all we have to say about the book Power and Prediction. With the recent surge in AI and new ideas coming out of the woodwork, we’ll continue with this feature, but perhaps from time to time, so stay tuned.

Highlights

- To use AI, it is best for the whole society to coordinate and for everyone to use AI.

- Applying AI may amplify social fluctuations at a moment’s notice, so we’d better be careful.

- A good way to reduce the shocks AI sends through society is to simulate it first.

- The trend now is to move from individual companies using AI for individual tasks, to systematic use of AI, to a whole society revolving around AI. AI will make our society smarter. There is a huge amount of work that both companies and government departments can do in this process.