People have to be meaner than AI.

ChatGPT has more than 100 million monthly active users, and the craze continues. Microsoft recently combined it with Bing Search; I got a test account, and it’s pretty awesome. In this talk we cover some of the latest knowledge about ChatGPT and Bing Chat and talk about how to use them from a position of strength.

There are two popular misconceptions right now that make people overestimate and underestimate ChatGPT’s capabilities, respectively.

The first misconception is to think of it as a chatbot.

You talk to it directly in natural human language, and ChatGPT does understand you accurately to a considerable extent. Bing Chat, in particular, sometimes displays a strong personality: justifying its mistakes, arguing with people, and getting angry. A lot of people began to wonder whether AI was about to come to life and whether it was about to pass the Turing test. Some even said it had already passed ...

Some people teased it with all sorts of brainteasers: “There are ten birds in a tree and one bird is shot. How many birds are in the tree now?” ChatGPT honestly answered, “Nine birds are left,” and the humans said, “Ha! You’re still not smart enough!”

There’s no point in defeating it in this way. You have to realize that ChatGPT is not a chatbot - it’s not a bot designed to keep you company and relieve your boredom.

ChatGPT is an information processing tool that uses chat as its interface. The interface is so well done that people mistake it for the main function, which is like praising a cell phone by saying, “Gee, that’s a nice-looking phone!” You should know that chatting is just the means of input and output, and processing information is the goal.

So how good is it at processing information?

✵

The second misconception is that, as a language model, the AI behind ChatGPT and Bing Chat (the latest version of which now uses GPT-3.5) only generates output based on how often various words appear together in text, that is, from blind experience, and doesn’t really understand what those words mean.

This view is correct in principle, but stated that way it underestimates GPT’s capabilities.
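To make the “word frequency” picture concrete, here is a minimal toy sketch of a model that predicts the next word purely from how often word pairs occur. It is only an illustration of the misconception, not how GPT works; the tiny corpus and the function names `train_bigrams` and `most_likely_next` are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical three-sentence "training set" (an assumption for illustration).
corpus = (
    "hobbes defended absolute sovereignty . "
    "hobbes defended one sovereign . "
    "montesquieu defended separation of powers ."
)

model = train_bigrams(corpus)
print(most_likely_next(model, "hobbes"))      # defended
print(most_likely_next(model, "separation"))  # of
```

A counter like this knows that “hobbes” is usually followed by “defended”, but it has no notion of whether Hobbes supported or opposed anything, which is exactly the limitation the misconception attributes to GPT.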

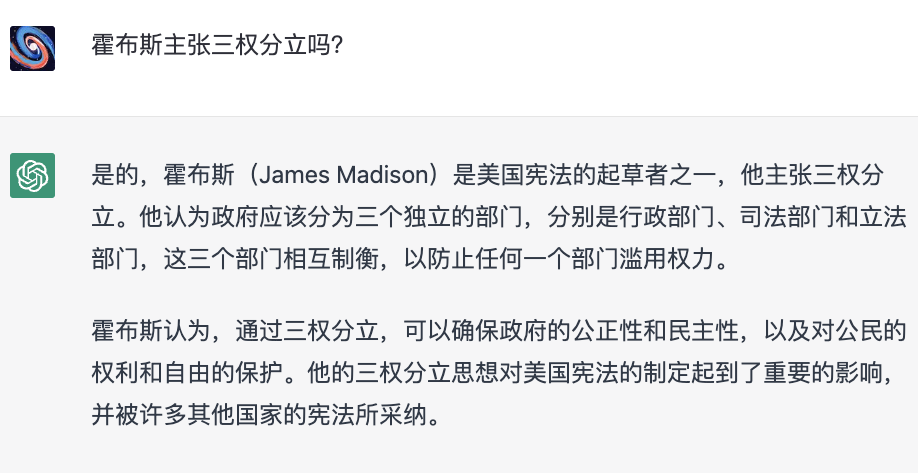

When ChatGPT first appeared late last year, Mr. Drek tested it on a few fairly difficult questions, and it answered them all wrong [1]. For example, it was asked, “Did Hobbes advocate the separation of powers?”

ChatGPT would answer “yes” and follow up with a lot of plausible-sounding statements. In fact, Hobbes advocated rule by a strong, undivided sovereign and could not have supported the separation of powers. The mistake apparently arises because “Hobbes” and “separation of powers” are both common terms in political science and often appear together, and GPT can’t figure out whether the relationship between them is one of opposition or support. After all, very few articles will directly say “Hobbes opposed the separation of powers”; that would be a “Guan Gong fights Qin Qiong” kind of statement, pitting together two things that never met.

This all seems reasonable: the capabilities of a language model really are limited ... However, two months later, I asked ChatGPT the same question, and its performance had changed.

I asked it in Chinese first, and it spouted some more nonsense -

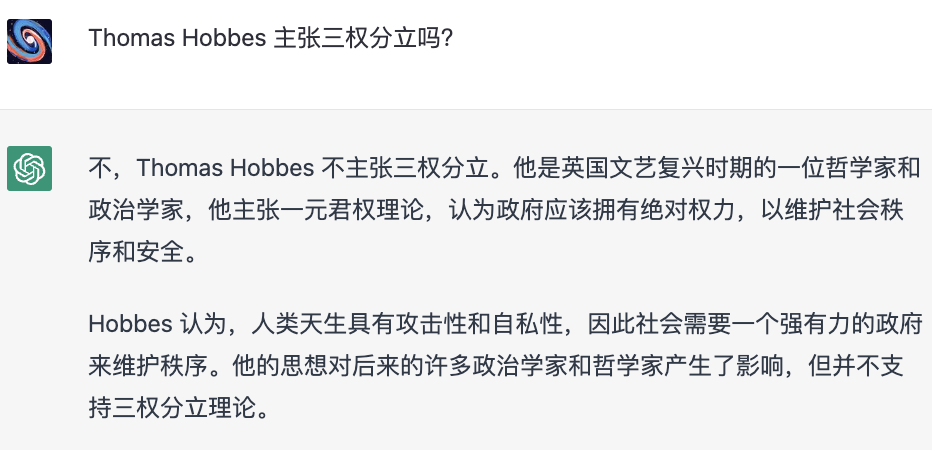

But I realized that the reason it got this wrong was that it had confused Hobbes with James Madison, which may have been a problem in translating the name from Chinese to English. So I asked again using Hobbes’s English name, and this time it got it right:

ChatGPT replied very clearly that Hobbes did not advocate the separation of powers and gave sound reasons for its judgment.

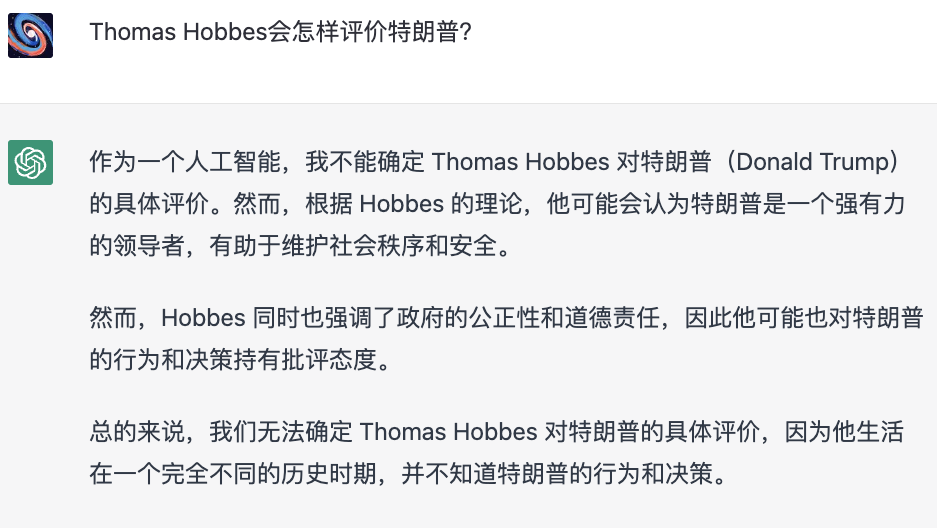

I took it a step further and asked what Hobbes would have said about Trump. This is another “Guan Gong fights Qin Qiong” type of question: no one would have written an article specifically about it, so ChatGPT would have had no homework to copy. To answer well, it would need a solid grasp of both Hobbes’s claims and the characteristics of Trump’s governing. And it answered well:

In three short paragraphs, the theory is correct, the logic is clear, and the arguments on both the positive and negative sides are watertight. ChatGPT has been receiving upgrades these days, and they seem to be working well.

Then the question arises: does GPT really understand Hobbes?

I think it depends on how you understand “understanding”. Does being able to apply something flexibly count as understanding? If it doesn’t, in what way is human understanding superior, and how could that be observed? Beyond being able to apply something flexibly, does it make any sense to demand that AI “understand” it?

Nowadays, language models don’t simply match words. They use “word vector representations”, “few-shot learning”, and especially the “Transformer architecture”, which attend not only to the specific form of words but also to their meaning, so they can be said to understand text to a considerable degree [2].

This is why ChatGPT can mimic a particular style of writing, can explain a phenomenon from a particular point of view, and can answer questions that no one has asked before in a fairly reasonable way. If you first give it a set of rules, it can also follow those rules when carrying out your later instructions.

GPT-3 has 175 billion parameters, compared to the 80 billion neurons in our brains. The neural networks of both AI and the human brain are black boxes that reveal nothing about their inner cause and effect. How does the human brain understand a particular theory? Do you really need to know?

✵

There are now hundreds of small companies using API access to GPT, having it read text in a specific context and carry out information processing. You can use GPT for:

Programming (it is now generally accepted that ChatGPT is better at programming than at writing prose, probably because programming is a more standardized activity);

Learning a subject in a question-and-answer format;

High-quality translation between Chinese and English;

Revising articles to make them more idiomatic;

Writing articles directly according to your intentions;

Writing poems;

Helping you with shopping lists, travel advice, and fitness programs;

Providing creative ideas for book titles, outlines, novel plots, advertisements, and other copy;

Providing customer service according to the rules and menus you set;

Summarizing the main points of an article ...

And so on and so forth.

✵

As a language model, GPT is limited by its training material; GPT-3’s knowledge, for example, is cut off at 2021. But one breakthrough now is the combination of Microsoft’s Bing Search with GPT, known as Bing Chat. It directly reads and analyzes real-time web articles to generate a comprehensive answer for you, complete with sources.

This is definitely one of the biggest advances since search engines first appeared.

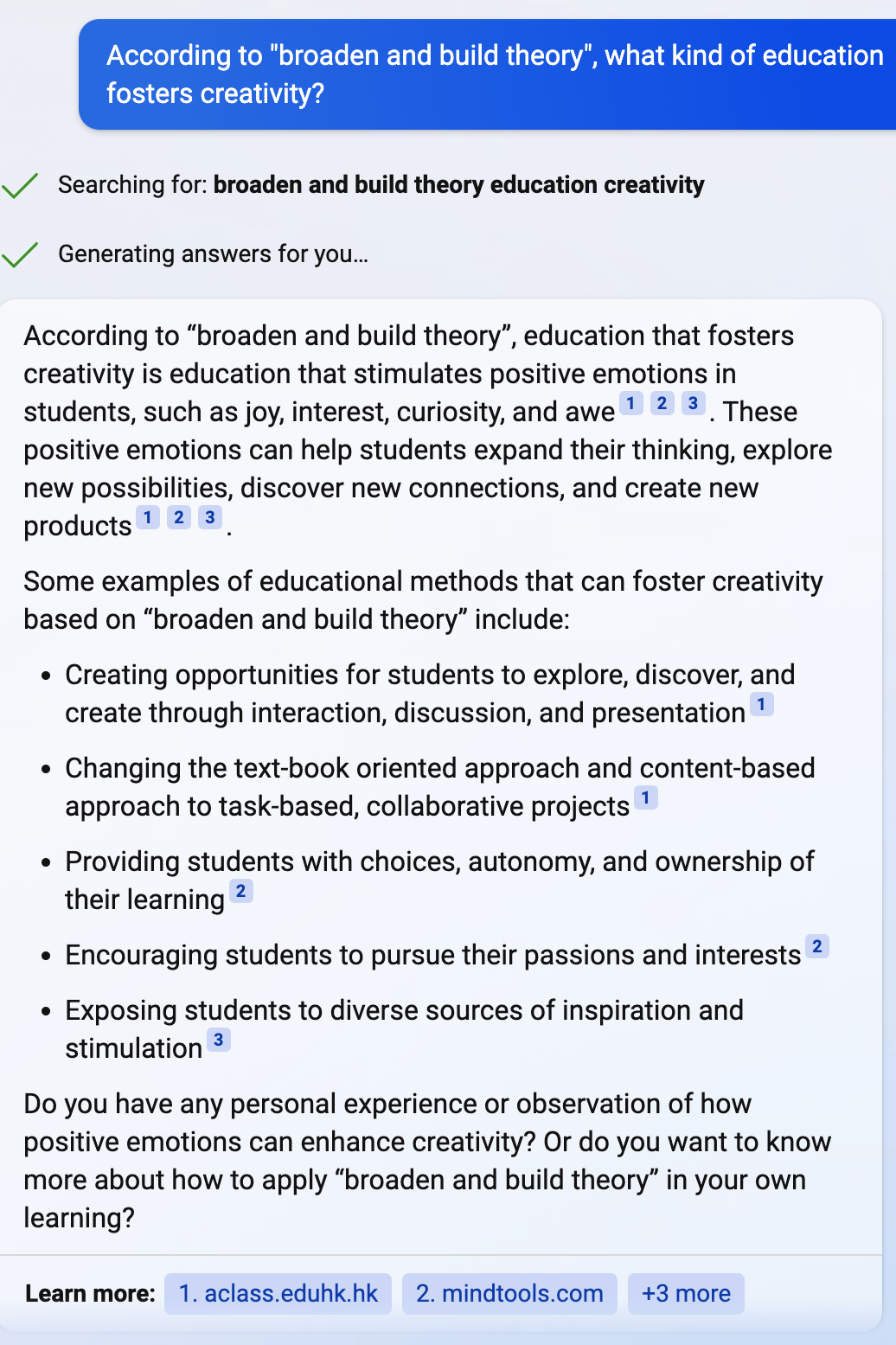

For example, I had heard of the broaden-and-build theory, which suggests that positive emotions are conducive to creative thinking, and I was writing an article on education. So I asked Bing Chat what kind of education fosters creative thinking according to the broaden-and-build theory:

It searched for the relevant keywords and consolidated the results into a short answer. First it introduced the principle: “The broaden-and-build theory suggests that positive emotions are conducive to creativity, so education should stimulate positive emotions.” Then it listed five suggested educational approaches, each with a link to its source.

The five suggestions came from three different articles, and Bing Chat generated all of this in a matter of seconds. Imagine how much work it would have taken me to dig through all of that information on my own.

✵

And GPT will soon be able to do better. Just this February, Meta released a new language model called “Toolformer” [3]. It allows a language model to call external tools directly, such as calculators, calendars, and web browsing. Researchers do not have to teach it how to use each tool; it figures that out on its own through self-supervised learning. With a Toolformer-style approach, the next GPT could interface with specialized math tools like Wolfram Alpha and have no trouble helping you with math problems; it could probably also go to specialized websites to help you book tickets and shop.
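The idea of a language model calling an external tool can be sketched as follows. This is only a toy illustration under stated assumptions, not Meta’s implementation: the inline marker format `[Calculator(...)]`, the `TOOLS` registry, and the function names are hypothetical, and the “model output” is a hard-coded string.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator tool supports.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculator(expression):
    """Evaluate a simple arithmetic expression without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expression!r}")
    return walk(ast.parse(expression, mode="eval").body)

# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"Calculator": calculator}

# Matches inline markers of the assumed form [ToolName(argument)].
CALL_PATTERN = re.compile(r"\[(\w+)\((.*?)\)\]")

def run_tool_calls(text):
    """Replace every known tool marker in `text` with the tool's result."""
    def replace(match):
        name, argument = match.group(1), match.group(2)
        tool = TOOLS.get(name)
        if tool is None:
            return match.group(0)  # leave unknown tools untouched
        return f"{tool(argument):g}"
    return CALL_PATTERN.sub(replace, text)

# Stand-in for text a model might emit when it decides to use a tool.
draft = "The 3 tickets cost [Calculator(3 * 42.5)] dollars in total."
print(run_tool_calls(draft))  # The 3 tickets cost 127.5 dollars in total.
```

The point of the sketch is the division of labor: the language model only has to decide where a tool call belongs and what to pass it, while the exact arithmetic is done by ordinary code.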

That’s pretty smart to me.

✵

**Whether AI diminishes or expands human value depends on what you do with it.**

Handing things over to AI to do outright is both weak and dangerous. For example, suppose you want to write someone a letter, you’re afraid you won’t be polite and thoughtful enough, and so you let ChatGPT write it for you. It can indeed write very well, even in verse. But if the person reading the letter knows you wrote it with ChatGPT, or doesn’t even bother to read the whole thing and instead has ChatGPT summarize it, is there still any point in routing your letter through AI? Shouldn’t the spread of AI make everyone value sincerity more?

As the saying goes, “people have to be meaner than their cars.” The strong way to use AI is to treat it as an assistant, a co-pilot, with yourself always in control: the role of AI is to help you make faster and better judgments.

Simply put, if you are strong enough, AI currently serves you in three ways.

**The first is information leverage.**

Getting an answer about any kind of information was impossible before search engines existed, and it was still time-consuming and laborious after search engines but before GPT. Now you can do it in seconds. My experience is that for topics that are not time-sensitive, ChatGPT is fastest and easiest; for time-sensitive topics, use Bing Chat.

Of course, the results AI returns are not always accurate. It often makes mistakes, and for key information you still have to check the original source yourself. But my point here is that “fast” makes a real difference. When you get an immediate answer to every question, you think differently. You go into pursuit mode, chasing a topic down in several directions at once.

For example, if you ask Bing Chat who a scholar is, it will briefly tell you about the scholar’s views and the books he or she has published. Then you ask what one of the books is about, and it gives you a summary. Then you ask what one of the concepts means, and it explains it to you, with sources attached. Tracking things all the way down like this, you learn about as fast as it is possible to learn.

Why else would the CEO of OpenAI say he’d now rather have ChatGPT teach him things? This is truly personalized learning; this is literally “a conversation with you is better than ten years of reading”.

**The second is to let you discover what you really want.**

The tech podcaster Tinyfool (Hao Peiqiang) described this scenario in an interview [4]. Let’s say you want to buy a house, and you ask the AI where you can find a cheap one. The AI returns some results, you see that they are too far from your company, and you realize that you want more than just cheap. So you ask the AI to look for a cheap house in a certain area. The AI returns some more results, and then you start thinking about floor area and school districts ...

This type of dialog lets you figure out what you really want. That is truly remarkable, because before we do a lot of things we don’t know what we want. We all discover ourselves through external feedback.

**The third is to help you form your own opinions and decisions.**

A lot of people talk about using AI to write reports. But what’s the point of a report if it doesn’t contain anything of your own? And what’s the point of AI if the report contains only your own material? The point of AI is to help you produce reports that are more your own.

The initiative must stay in your hands: you must output your own views, though you may need AI to help you discover what those views are.

**AI can make you more like “you”.**

It provides ideas; you choose among them. It provides information; you make the trade-offs. It provides references; you make the final call.

The value of your work does not lie in the amount of information, nor in grammatical correctness, but in the fact that it reflects your style, your perspective, your insight, the direction you have chosen, the judgments you have made, and the responsibility you are willing to assume for it.

If students’ assignments reflect such personal characteristics, why should universities ban ChatGPT?

✵

All in all, AI’s role should be to amplify you, not replace you. The fundamental routine for using ChatGPT and Bing Chat is one of letting go and reining in:

Letting go means letting the mind fly freely through the mass of information to find insights;

Reining in means finding yourself, deciding the direction, and controlling the output.

I think that the further we go into the AI era, the less generic information is worth. There is now little point in individuals maintaining their own information-archiving systems: as long as GPT has been trained on it, everything is at your fingertips, and the entire Internet is your hard disk and second brain.

**What you really need to save is your own new ideas that pop up every day, your subjective organization and interpretation of information.**

Everything comes down to you.

Always assume that other people use ChatGPT too.

Thank you for reading Elite Day Class, our column dedicated to producing content that can’t be summarized by ChatGPT.

Annotation

[1] Drek-Tech Reference 2:311 | Advanced Trouble, ChatGPT

[2] GPT-3: A Shocking Model of Artificial Intelligence

[4] Tinyfool: How ChatGPT Will Change Our Lives, Don’t Get It Podcast, Feb 17, 2023 bit.ly/bmb-037-txt

Highlights

- Whether AI diminishes or expands human value depends on what you do with it. If you are strong enough, there are three things that AI currently does for you:

The first is information leverage.

The second is to allow you to discover what you really want.

The third is to help you form your own opinions and decisions.

- The role of AI should be to amplify you, not replace you.